Precision Inference with Jetson Orin™

Watch how the Inference Server for NVIDIA® Jetson AGX Orin™ & Jetson Orin™ NX empowers edge computing with unmatched performance. Explore real-world applications where advanced AI models are deployed with precision, speed, and efficiency.

Engineered to Meet Your Workplace Requirements

The Inference Server powered by NVIDIA® Jetson AGX Orin™ & Jetson Orin™ NX is designed to accelerate AI workloads across a diverse range of industries, offering innovative solutions for real-world challenges.


Managing multiple NVIDIA® Jetson AGX Orin™ development kits can quickly clutter labs and complicate workflows. The innovative 2U Inference Server eliminates the need for up to 24 individual dev kits, providing a scalable, centralized solution for AI development. Benefit from enhanced collaboration – a unified platform simplifies teamwork, enabling multiple developers to work simultaneously on the same server. Whether you’re prototyping, testing, or scaling up, this Jetson server makes it easier, faster, and more efficient.

For inspection and safety monitoring in mining, a customer sought to build a resilient system where no two lines relied on the same compute resources. By leveraging the Inference Server, they were able to decouple sensors from specific hardware, assigning them to one or more modules within the server. If a module becomes unstable, the workload seamlessly shifts to another module in the chassis, allowing monitoring to continue without any disruption to site operations.
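The failover behavior described above can be sketched in a few lines. This is a minimal illustration only, not the server's actual management software: the `Module` class, the `assign` and `fail_over` helpers, and the sensor names are all hypothetical, and the least-loaded placement rule is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """Hypothetical stand-in for one compute module in the chassis."""
    name: str
    healthy: bool = True
    sensors: list[str] = field(default_factory=list)


def assign(modules, sensor):
    """Place a sensor on the healthy module that currently has the fewest sensors."""
    healthy = [m for m in modules if m.healthy]
    if not healthy:
        raise RuntimeError("no healthy modules available")
    target = min(healthy, key=lambda m: len(m.sensors))
    target.sensors.append(sensor)
    return target


def fail_over(modules, failed):
    """Mark a module unhealthy and move its sensors to the remaining healthy modules."""
    failed.healthy = False
    orphaned, failed.sensors = failed.sensors, []
    for sensor in orphaned:
        assign(modules, sensor)


# Three modules and four sensors (all names are illustrative).
modules = [Module(f"module-{i}") for i in range(3)]
for s in ["cam-a", "cam-b", "lidar-a", "cam-c"]:
    assign(modules, s)

# Simulate module-0 becoming unstable: its sensors move to the other modules.
fail_over(modules, modules[0])
for m in modules:
    print(m.name, "healthy" if m.healthy else "failed", m.sensors)
```

Because sensors are tracked separately from the module that serves them, losing one module only changes the mapping; no sensor is tied to a specific piece of hardware.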

The Inference Server delivers the ease of deployment and scalability essential for managing multiple camera streams. A customer needed to demonstrate its AI software by processing multiple IP camera streams at a major convention. Armed with nothing but access to the event site’s network and an Inference Server with 24 modules, the customer was able to access all indoor and outdoor IP camera feeds and began to provide AI insights the day they arrived on site.

This distributed computing method aims to put HPC clusters closer to the Edge for workloads where high performance is the main driver. By leveraging these systems, users maximize compute power for every watt of energy consumed. Some have even developed satellite-based supercomputers, requiring remote compute access from locations as far-reaching as space.

The Inference Server can be used in a multi-user role with Kubernetes for container orchestration. This method gives users dedicated access to computational environments and resources without the need to self-manage the environment. In this scenario, compute resources are dynamically allocated to a given team based on work requirements.

Multi-tenancy typically refers to multiple users sharing the resources of a single server by dividing physical resources into multiple virtual machines. The Inference Server can provide the same many-users-to-one-chassis deployment, but with a dedicated physical server per user. The dedicated server (or module) approach allows for the same 1:1 setup without the performance and configuration overhead associated with virtualization. Universities have deployed the servers in this configuration, assigning an individual GPU-capable development environment to each student.
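As a rough illustration of the 1:1 setup, the sketch below maps each user to their own module. The function name, the `module-NN` naming scheme, and the user names are hypothetical; the default of 24 modules is taken from the module count quoted elsewhere on this page and may differ for a given configuration.

```python
def assign_dedicated(users, module_count=24):
    """Give every user a dedicated module; refuse to share a module between users."""
    if len(set(users)) != len(users):
        raise ValueError("user names must be unique")
    if len(users) > module_count:
        raise ValueError(
            f"{len(users)} users requested but only {module_count} modules exist"
        )
    # One module per user, numbered from 1 (naming scheme is illustrative).
    return {user: f"module-{i:02d}" for i, user in enumerate(users, start=1)}


roster = assign_dedicated(["ana", "ben", "chloe"])
for user, module in roster.items():
    print(f"{user} -> {module}")
```

The mapping fails fast instead of doubling users up on a module, which mirrors the point of the dedicated approach: each user keeps a whole physical module to themselves.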

The Inference Server shines in medium-to-large-scale environments like smart cities, smart factories, retail spaces, and extractive industries. These dynamic applications rely on numerous IP-based devices and data sources for analytics and monitoring, where deploying bulky traditional GPU servers isn’t feasible. Instead, customers turn to the Inference Server. Its scalable design allows them to expand within the same chassis, keeping pace with tomorrow’s demands while maximizing efficiency.

Traditional manufacturing automation relies on separate computing and networking systems, adding complexity and cost while limiting scalability. The Inference Server combines AI processing with an integrated managed Ethernet switch to simplify infrastructure and enhance performance. The server’s Layer 2 switching and Layer 3 routing capabilities, 10G uplinks, TSN, and 1588v2 support enable real-time defect detection, synchronized robotics, and seamless coordination. This integration reduces the need for separate networking hardware, lowers costs, and ensures precise, low-latency communication, boosting efficiency and scalability in high-demand industrial environments.

Ready for Robotics and Hardware-in-the-Loop

For end-to-end robotics innovation, the Inference Server powered by Jetson AGX Orin™ and Jetson Orin™ NX delivers a high-performance solution for intensive Hardware-in-the-Loop (HIL) scenarios.

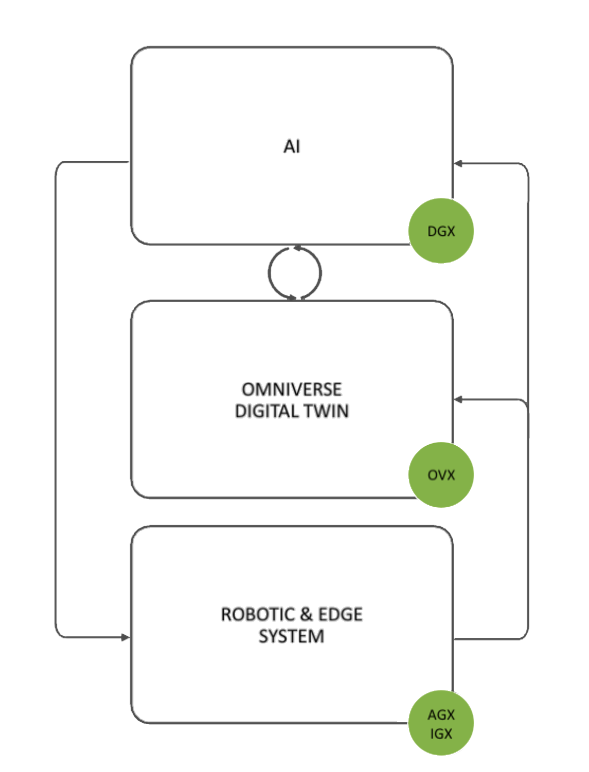

Designed to meet the demanding computational requirements of real-world robotics applications, the Inference Server provides a critical bridge in digital twin simulations. In a typical workflow, synthetic sensor data and robot simulations generated by an OVX server are processed on the Inference Server, where advanced AI models and robotics algorithms are deployed. Capable of handling data from multiple sensors—such as cameras, LiDARs, and IMUs—simultaneously, it overcomes the limitations of single-board solutions. The server efficiently relays computation results back to Isaac Sim™ for validation, enabling iterative development and testing without the need for physical assets. This approach accelerates workflow refinement, making the Inference Server a key driver for scalable AI and robotics development.

Source: NVIDIA®


Optimized for NVIDIA® JetPack™: Simplified, Efficient AI Deployment

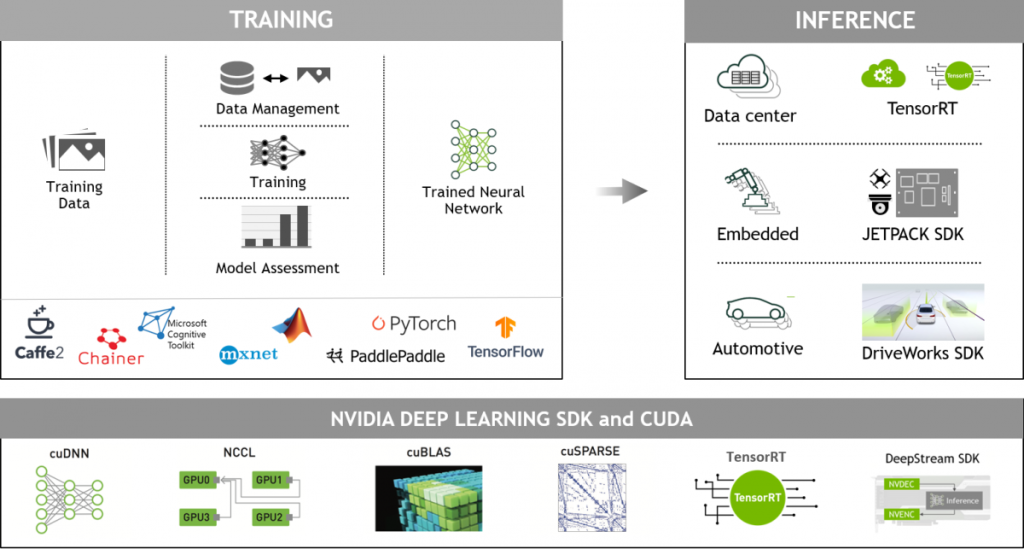

The Inference Server powered by Jetson AGX Orin™ & Jetson Orin™ NX is optimized for NVIDIA’s JetPack™ SDK, streamlining AI development and deployment. Pre-configured for seamless integration, it eliminates setup complexities, so you can focus on your AI tasks with enhanced performance and efficiency.