Inference Server, Powered by NVIDIA® Jetson Orin™ NX

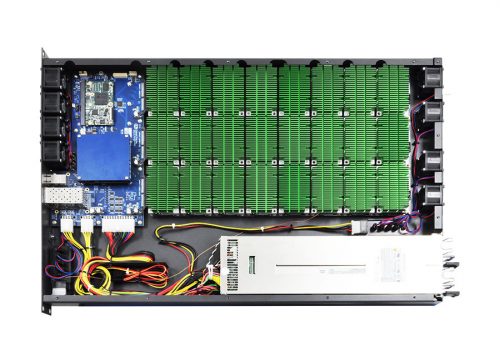

Housed in a 2U chassis, the Orin NX Inference Server can be outfitted with up to 24x NVIDIA Jetson Orin NX modules.

Developed in partnership with USES Integrated Solutions, the Orin NX Inference Server is an extremely low-wattage, high-performance deep learning inference server powered by the NVIDIA Jetson Orin NX 16GB module. Within the server, three processor module carriers each house up to eight Jetson Orin NX modules, for up to 24 modules in total. All modules are connected over a Gigabit Ethernet fabric through a specialized managed Ethernet switch (XDG205), developed by Connect Tech, with 10G uplink capability.

Specifications

| Feature | Specification |
|---|---|
| Processing Modules | 24x NVIDIA® Jetson Orin™ NX |
| Out-of-band Management Module | ARM based OOBM |
| Processor Module Carriers | Each module carrier will allow up to 8x Orin NX modules to be installed |
| Internal Embedded Ethernet Switch | Vitesse/Microsemi SparX-5i VSC7558TSN-V/5CC Managed Ethernet Switch Engine (XDG205) |
| Internal Array Communication | 24x Gigabit Ethernet / 1000BASE-T / IEEE 802.3ab channels |
| External Uplink Connections | 4x SFP+ 10G uplink |
| Misc / Additional IO | 1x 1GbE OOB management port via RJ-45; 1x USB UART management port; status LEDs |
| Input Power | 100~240 VAC (dual redundant) with 1000W output each |
| Internal Storage | Each Orin NX module has its own M.2 NVMe interface |
| Operating Temperature | 0°C to +50°C (+32°F to +122°F) |
| Dimensions | Standard 2U rackmount height (3.5 inch / 88.9mm), 25 inch / 635mm depth |
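As a rough sanity check on the networking figures in the specifications, the sketch below compares the aggregate bandwidth of the 24 internal Gigabit Ethernet channels with the combined capacity of the four 10G SFP+ uplinks. The constant and function names are illustrative only. The calculation uses nominal line rates, assumes all four uplinks are in use at once, and ignores protocol overhead and real traffic patterns.

```python
# Nominal figures taken from the specifications; names are illustrative.
MODULE_LINKS = 24          # internal 1000BASE-T channels, one per module
MODULE_LINK_GBPS = 1.0     # Gigabit Ethernet nominal line rate
UPLINK_PORTS = 4           # SFP+ uplink ports
UPLINK_GBPS = 10.0         # nominal line rate per uplink


def oversubscription_ratio(links, link_gbps, uplinks, uplink_gbps):
    """Return aggregate internal bandwidth divided by aggregate uplink bandwidth."""
    internal = links * link_gbps
    external = uplinks * uplink_gbps
    return internal / external


ratio = oversubscription_ratio(
    MODULE_LINKS, MODULE_LINK_GBPS, UPLINK_PORTS, UPLINK_GBPS
)
print(f"internal: {MODULE_LINKS * MODULE_LINK_GBPS:.0f} Gbps")
print(f"uplink:   {UPLINK_PORTS * UPLINK_GBPS:.0f} Gbps")
print(f"ratio:    {ratio:.2f}")
```

A ratio below 1 suggests that, at nominal line rates, the uplinks are not the bottleneck when every module sends traffic out of the chassis simultaneously. Actual throughput depends on switch configuration and workload.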

Ordering Information

Main Products

| Part Number | Description |
|---|---|
| UNGX2U-07 | Orin NX Inference Server – 2U Array, with 24x NVIDIA Jetson Orin NX 16GB, 24x 1TB NVMe SSD |
| UNGX2U-08 | Orin NX Inference Server – 2U Array, with 16x NVIDIA Jetson Orin NX 16GB, 16x 1TB NVMe SSD |
| UNGX2U-09 | Orin NX Inference Server – 2U Array, with 8x NVIDIA Jetson Orin NX 16GB, 8x 1TB NVMe SSD |
| UNGX2U-10 | Orin NX Inference Server – 2U Array, with 24x NVIDIA Jetson Orin NX 16GB, 24x 2TB NVMe SSD |
| UNGX2U-11 | Orin NX Inference Server – 2U Array, with 16x NVIDIA Jetson Orin NX 16GB, 16x 2TB NVMe SSD |
| UNGX2U-12 | Orin NX Inference Server – 2U Array, with 8x NVIDIA Jetson Orin NX 16GB, 8x 2TB NVMe SSD |
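The part numbers differ only in module count and per-module SSD size, so picking one can be expressed as a small lookup. The sketch below is a hypothetical helper, not a vendor tool: the `ServerConfig` class, `CONFIGS` table, and `find_config` function are names introduced here for illustration, and the data is transcribed from the ordering table.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ServerConfig:
    part_number: str
    modules: int   # number of Jetson Orin NX 16GB modules
    ssd_tb: int    # capacity of each NVMe SSD in TB (one SSD per module)

    @property
    def total_storage_tb(self) -> int:
        """Combined NVMe capacity across all modules, in TB."""
        return self.modules * self.ssd_tb


# Transcribed from the ordering table.
CONFIGS = {
    c.part_number: c
    for c in (
        ServerConfig("UNGX2U-07", 24, 1),
        ServerConfig("UNGX2U-08", 16, 1),
        ServerConfig("UNGX2U-09", 8, 1),
        ServerConfig("UNGX2U-10", 24, 2),
        ServerConfig("UNGX2U-11", 16, 2),
        ServerConfig("UNGX2U-12", 8, 2),
    )
}


def find_config(modules: int, ssd_tb: int) -> Optional[ServerConfig]:
    """Return the listed configuration matching the request, or None."""
    for config in CONFIGS.values():
        if config.modules == modules and config.ssd_tb == ssd_tb:
            return config
    return None


match = find_config(modules=16, ssd_tb=2)
if match is not None:
    print(match.part_number, f"{match.total_storage_tb} TB total NVMe")
```

A request that matches none of the listed combinations returns `None`.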

Custom Design

Looking for a customized NVIDIA® Jetson Orin™ NX product? Click here to tell us what you need.